The mantra “Data is the new oil” was officially retired. It was a crude metaphor of a brief period. Data in 2026 is no longer a thing you find; its a resource you develop.

In 2026 we are entering the era of artificially generated data the technique and the science behind creating information using algorithms that are similar to what is found in the real world but entirely synthetic.

In the past synthetic data generation was an obscure security tool used by banks. Nowadays its the main fuel source for leading AI system. The autonomous cars that mimic billions of miles of edge cases within the “Omniverse”

to healthcare bots that are trained on fake records of patients in order to treat actual diseases The shift in technology is a fact. Gartners forecast has been realized that by 2026 more than 75% of the data that is used for AI creation is artificial.

But the rapid expansion of these devices has led to a tangled environment. Engineers are making crucial mistakes those which resemble poor skincare routine.

They apply “heavy creams” (massive models) for simple issues and ignoring all the “ingredients” (source bias) using products for too long to the point that their models experience the digital version of chemical burns namely Model Collapse.

This is the guide to your ideal dermatologist in the age of data. This guide is your ultimate source to learn how to master the art of synthetic data creation by 2026 without destroying your “skin barrier” of your AI infrastructure.

Part 1: The Anatomy of Fabrication

For a better understanding of the concept of synthetic data generation by 2026 it is necessary to look beyond the basic GANs (Generative Adversarial Networks) that were used in the previous. Technology has advanced to an ecosystem that is multi layered.

1. The Generative Engines

The engine room has changed. Although GANs remain utilized for high definition imaging synthesized data generation for textual and tabular data has been replaced by transformer based structures and Diffusion models.

- Tabular Transformers The HTML0 Tabular Transformers treat the rows like sentences understanding what is the “grammar” of your customer database to create anonymous rows that keep complex relationships (e.g. age and. income or. the credit score).

- Agent Based Simulation It is the hottest thing to watch in 2026. Instead of simply creating static files it is now possible to simulate whole groups that comprise AI agents. If youre in need of transaction information then you cant copy the old logs you create thousands of “customer agents” with distinct personality and allow them to “shop” in a virtual store. They will generate clean detailed behavior information.

2. Differential Privacy (The SPF)

By 2026 synthesized data creation is not separate from privacy. The standard is “Epsilon Differential Privacy.” The mathematical guarantees ensure that the data of a single person cannot be altered by reverse engineering the output. Consider it the SPF 50 in your data pipeline. It blocks dangerous rays of re identification but lets the valuable “vitamin D” of statistical understanding pass through.

3. The Validation Layer

It is not safe to trust generators completely. The validation layer evaluates its output to actual facts (the “Ground Truth”) with metrics that include:

- Marginal Distribution Fidelity Do the columns appear identical?

- Correlation Integrity Have we mistakenly broken the connection that binds “smoking” and “lung cancer”?

- Privacy Leakage Risk An “red team” attack on the information to determine the possibility of real time records being obtained.

Part 2: The Titans of 2026 Top Tools and Platforms

The market has become consolidated to some heavy hitters with each of them offering their own taste of artificial technology for data creation.

1. Gretel.ai (The Estee Lauder of Data)

Gretel is still the benchmark for developer friendly synthetic generation of data.

- is ideal for: General purpose tabular text and data that is relational.

- 2026 update: Gretel Navigator is now supports “Text to Dataset. ” Simply type in “Generate an array that contains 5000 insurance claims related to flood damage that occurred within Florida” and the LLM driven engine creates the schema and populates the rows for you automatically.

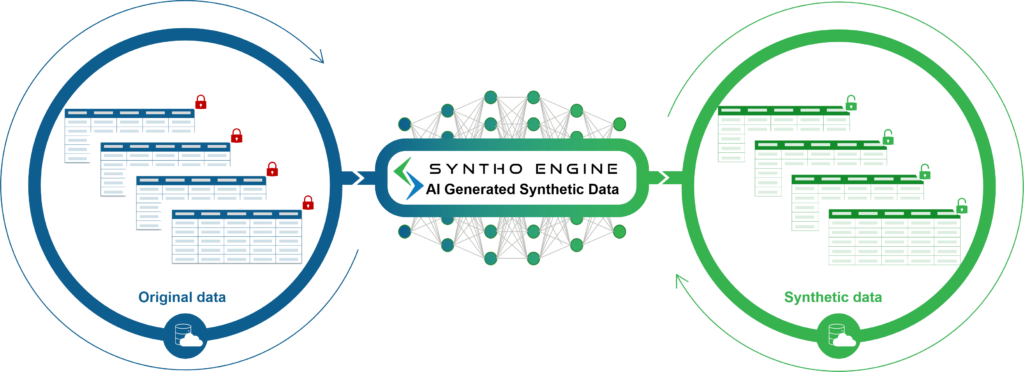

2. Syntho (The Clinical Specialist)

Syntho has carved out a niche within highly regulated sectors such as banking and healthcare.

- is ideal for: High fidelity tabular data which is in strict compliance with GDPR/AI Act.

- The Superpower of HTML0: Their “Smart Noise” injection can help make a trade off between privacy and usefulness better than every other program.

3. NVIDIA Omniverse / Cosmos (The Physical World)

When it comes to robotics and autonomous systems NVIDIA is the king.

- Ideal for: Physical as well as visual synthesized data generation.

- Use Cases: Training a robot to fold laundry using simulations of thousands of scenarios for folding shirts within a physics compliant virtual environment before it ever comes in contact with an actual piece of fabric.

4. Mostly.ai (The Enterprise Workhorse)

An experienced player in the field Mostly.ai excels at handling large complicated legacy databases.

- Ideal for: on premise deployments that require the data is not able to leave the firewall of the bank.

- 2026 update: The native software supports reference integrity across multiple tables making sure that the time the create “Orders” and “Customers” they will still coincide perfectly.

Part 3: The “Skincare” of Data Engineering

Whats the difference between the synthetic generation of data with skincare? Both require a equilibrium. The goal is to enhance the quality of your surface (the data) while not compromising the base structure (the actuality of statistics). By 2026 the majority of errors will be surprisingly insignificant.

1. Picking “Heavy Creams” (The Over Engineering Error)

When it comes to skincare putting an oil based thick moisturizing cream on skin that is oily can cause breakouts. When it comes to AI its about making use of massive computationally heavy models that generate easy task.

- A Problem: using a model with 70 million parameters LLM or a sophisticated Diffusion model for synthesized data generation to create a basic Excel spreadsheet.

- The result: You burn thousands of dollars on GPU expenses and create massive delay. The result is that you “clog the pores” of your pipeline.

- The Solution: Use “lightweight lotions.” for simple data in tabular form using it is recommended to use a Bayesian Network or a Gaussian Copula tends to be quicker as well as cost effective and better than the Transformer. Do not use a flamethrower for ignite a candle.

2. Ignoring Ingredients (The Source Bias Error)

The last thing you would do is apply a substance without first confirming if youre sensitive to its ingredients. But scientists often test artificial data generation using “dirty” source data.

- The error: Training a generator with historical employment data which contains bias towards gender.

- The Result: The generator learns the bias and then amplifies it. It is an artificial dataset that appears “clean” but statistically discriminates on women. This is known as the “hidden allergen” of AI.

- The Solution: Audit your ingredients. Process your actual data beforehand in order to adjust groups prior to the time you can synthesize. Make use of this generator in order to correct the imbalance (e.g. the oversampling of or underrepresented groups) instead of replicating the bias.

3. Overusing Products (The “Model Collapse” Error)

Applying a retinol based peel every night is a way to ruin your skin barrier. Similar to training models repeatedly with artificial generated data outputs destroys”the truth barrier. “truth barrier.”

- An Error the “Ouroboros” impact. Model A is trained Model A on synthetic data. Model A creates new data. It is then used to train Model B on that data. After Generation C The model has lost connection to reality. The variances are smoothed out while the tails of distribution vanish and the model morphs into a white average.

- The consequence: Your AI becomes sure but it is not right. Its unable to deal with edge cases since the data generated by synthetic algorithms have “smoothed” them away.

- The Fix: The “Sandwich Method.” Always ensure that your training is anchored by a fresh layer of verified by a human “Ground Truth” data. Dont let your model feed run completely rely on itself.

4. Skipping the Patch Test (The Validation Error)

It is not recommended to apply new chemical to your face without trying a small patch before you apply it to your entire face. By 2026 the use of artificial data generation with no rigorous testing will be considered an act of professional naivety.

- An Error The generation of 10 million rows and then feed them directly into a production model due to “it looked okay” in an example.

- The Result: A “production breakout.” The simulation fails in real world conditions because the artificial data did not recognize an important connection (e.g. that it separated “high RPM” from “engine failure”).

- The solution: Automate the patch test. Each artificial data generation process must go through several tests of statistical validity (Kolmogorov Smirnov test and correlation matrixes) prior to being made available for release.

Part 4: Use Cases Where Synthetic Data Wins

The ability to determine the best place to use the synthetic generation of data is as vital as understanding how to create it.

The “Cold Start” Problem

It is your first time launching a technology driven app for fintech. There arent any users therefore no evidence to train an automated Fraud detection AI.

- solution: Use Agent Based artificial information generation to create a simulation of 10000 people making transactions. Launch with the help of a well trained brain rather than a blank one.

The “Unshareable” Data

Youre a hospital researcher. Youd like to exchange patient information with an academic institution for research in cancer However HIPAA does not permit it.

- Solutions: Make a totally artificial “twin” of your patient database. It keeps the statistical connection to “smoking” and “tumor size” but does not include any actual patient.

The “Edge Case” Simulation

It is your goal to build an autonomous car. It runs well in sunny weather However there is no information about “blizzards at night with a deer jumping out.”

- Solutions: You cannot wait for this to occur. It is necessary to use the synthetic generation of data to simulate this situation for 50000 times through an online simulator for training your vision system.

Part 5: Step by Step Implementation Guide

Do you have the right equipment to create your manufacturing facility? to unlocking the secrets of human behavior is the roadmap for 2026 to build the synthetic generation of data.

Step 1: Define the “Truth”

Which does the original source? Is it a CSV? It could be a database? Is it a stream? It is important to define the schema clearly.

- Action Cleaning the original data. Get rid of “dirty ingredients” like duplicate rows or clear formatting errors.

Step 2: Choose the Generator (The Formula)

- Tabular Data? Use Gretel or Syntho.

- Images? Use Stable Diffusion variants.

- Complex Logic? Use Agent Based Simulation.

Step 3: Train & Synthesize

Test the simulation. Start with small amounts creating 10% of the desired volume at first.

Step 4: The “Patch Test” (Validation)

Check the Quality Assurance report.

- Privacy Examine: Is the “Distance to Closest Record” (DCR) is it secure?

- Utility Test: If you train an Random Forest on the synthetic data will it be able to predict similarly to one based with actual data?

Step 5: Post Processing (The Toner)

Fine tune the output.

- Business rules: Ensure no “negative ages” or “start dates after end dates” are present. Synthetic data generation models occasionally make errors that seem logical and require rule based programming to correct the problem.

Step 6: Integration

The data will be piped in the MLOps pipeline. Then treat it as real data. Version control is controlled with tools like DVC (Data Version Control).

+1

Part 6: Future Trends Beyond 2026

The sector in synthesized data creation is growing rapidly.

1. Generative “On the Fly”

The static datasets will go away. As time goes on new the data will be created as part of the training. The algorithm will ask for “more examples of credit card fraud” as well as the generator of synthetics will spin the data in milliseconds before inputting them directly into the loop for training.

2. The “Watermarked” Web

In order to stop Model Collapse the internet of 2027 is expected to be split. Content created by humans will be encrypted. Synthetic data generation tools will tag automatically their outputs which will allow future algorithms to differentiate the difference between “organic” and “processed” information.

3. Privacy Preserving LLMs

Well see LLMs which can only talk in artificial data. Theyll be able to learn from confidential corporate data but “lobotomized” so they can not output real names or secret name but only synthesized generalizations.

Conclusion

Synthetic data generation is the fuel which will keep the engines of AI operating until 2026. Since real world data is becoming scarcer costly expensive and also legally risky to use The ability to create authentic fake data of high quality becomes an extremely powerful tool.

However remember the lesson on skincare. Its not about to have more information; its healthful data. Do not use the heavier creams of the over engineering. Be mindful of the components in the source distribution. Never never skipping the test of patch.

When you master artificial data generation by mastering synthetic data generation youre not only faking it. You create it. It is building a new future in which AI advancement is quicker more secure safe and better scalable.

- Synthetic Data Generation: The Ultimate Master Guide 2026

- GitHub Copilot Master Guide 2026: The Ultimate AI Coding Handbook

- How Accurate Are AI Content Detectors Master Guide 2026

- Cloud Native Database Architecture Step by Step for Beginners Master Guide 2026

- Ai Code Generation Tools: Step by Step for Beginners Master Guide 2026